A brief story about yet another long struggle with Python and wrapping my head around it vs. what I used to know about Perl, and what I know about Java and JavaScript. This serves as my final post for 2017, and I’m hoping for more time to blog in 2018!

Lambda and Python

I like setting up my environments so that they work as close to the target platform as reasonably possible. When I was developing AWS Lambda functions in Java and JavaScript, this was fairly straightforward. For my Java projects, I typically used Maven to manage dependencies. Similarly, with JavaScript, I would use NPM to manage those dependencies. In either case, I would end up with a jar or zip file that was a nice, neat package to send to AWS.

Yes, AWS gives guidance on how to package Python for Lambda deployment. This is all well and good, but who wants to drop all of their dependencies in the same directory as their code? I don’t! I prefer a “lib” or “vend” or some other subdirectory.

However, this story isn’t about that. It’s about the journey of getting to the point where I can even complain about having my dependencies in a subfolder.

Serverless and Python

On my development system, I use Ubuntu 16.04 as my primary operating system. By default, this includes Python 3.5. This is fine for most things. Where this becomes a problem is when other tools enforce other version requirements…

I like using the Serverless Framework to manage the development of my Lambda functions. I’ve been using it since pre-1.0 (yes, including needing to rewrite when they made some major direction changes during their 1.0 alpha!). It does a decent job and helps to keep me honest and fits into my overall workflow.

That being said, it (and AWS) wants Python 3.6. So much so that Serverless aborts when attempting to use Python 3.5.

So yeah. Seriously frustrating. These things just “sort of work” with Java and JavaScript…

Hand to Hand Combat

Googling around pointed at several options. Many pointed directly at upgrading the system Python to 3.6. Having completely trashed operating systems before by doing such foolhardy things, I hardly felt that this was the correct choice. However, I did run across an AskUbuntu suggestion for using pyenv to manage multiple Python installations. This seemed more my speed.

If you have used something like Node Version Manager (NVM), you will be somewhat familiar with the premise of pyenv. Succinctly, pyenv is a tool for managing multiple Python installations side by side. It also has plugins for extended features, such as managing Python “virtual environments” across the installed versions.

Setting up pyenv

Setting up pyenv is pretty straightforward. It installs and runs locally under your account. However, there are some dependencies you’ll need to install in order to compile other versions of Python.
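
For reference, a typical version of that setup looks something like the following. Treat it as a sketch under two assumptions: the package list is the build environment suggested in the pyenv wiki for Ubuntu, and the install itself goes through the pyenv-installer script, which places pyenv and its bundled plugins under ~/.pyenv.

```shell
# Build prerequisites for compiling CPython on Ubuntu.
# Assumed list, based on the pyenv wiki; trim or extend it for your system.
sudo apt-get update
sudo apt-get install -y make build-essential libssl-dev zlib1g-dev \
  libbz2-dev libreadline-dev libsqlite3-dev wget curl llvm \
  libncurses5-dev xz-utils tk-dev

# Install pyenv and its bundled plugins (pyenv-virtualenv among them)
# into ~/.pyenv. Assumed install method: the pyenv-installer script.
curl -L https://github.com/pyenv/pyenv-installer/raw/master/bin/pyenv-installer | bash
```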

After the files are installed, you append this to the bottom of your .bashrc:
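
A typical version of those lines is sketched below, assuming pyenv lives in the default ~/.pyenv and the pyenv-virtualenv plugin is installed. The guard around the two eval calls is an addition for safety, so the shell still starts cleanly if pyenv is missing.

```shell
# Tell the shell where pyenv lives (default location assumed).
export PYENV_ROOT="$HOME/.pyenv"
export PATH="$PYENV_ROOT/bin:$PATH"

# Only hook pyenv into the shell if the command is actually available.
if command -v pyenv >/dev/null 2>&1; then
  # Enable shims and version switching.
  eval "$(pyenv init -)"
  # Enable automatic activation of pyenv-virtualenv environments.
  eval "$(pyenv virtualenv-init -)"
fi
```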

Log out and log back in for the changes to take effect and you are good to go.

Getting to Python 3.6

Letting pyenv build Python is also fairly simple.
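
The command for that is pyenv install. A minimal sketch follows; the PY_VERSION variable and the guard are illustrative additions, so the snippet does nothing harmful when pyenv is not yet on the PATH.

```shell
# Version named in this post; swap in whatever release you need.
PY_VERSION="3.6.4"

if command -v pyenv >/dev/null 2>&1; then
  # Download and compile that release under ~/.pyenv/versions/.
  # -s skips the build if the version is already installed.
  pyenv install -s "$PY_VERSION"
  # List every version pyenv now knows about.
  pyenv versions
else
  echo "pyenv is not on PATH yet; finish the shell setup first" >&2
fi
```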

That installed version 3.6.4, which was current at the time of writing. The following two commands make use of a pyenv plugin called pyenv-virtualenv to configure a virtualenv for the newly installed Python. It also sets it to be the default virtualenv whenever you spawn new shells.
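
The first of those is pyenv virtualenv, which takes a base version and a name for the new environment. Roughly, with PY_VERSION and VENV_NAME as illustrative variables and the same kind of guard as a precaution:

```shell
PY_VERSION="3.6.4"   # interpreter built by pyenv in the previous step
VENV_NAME="general"  # any name works; "general" is the one used in this post

if command -v pyenv >/dev/null 2>&1; then
  # Create a virtualenv called "general" on top of Python 3.6.4.
  pyenv virtualenv "$PY_VERSION" "$VENV_NAME"
else
  echo "pyenv is not on PATH yet; finish the shell setup first" >&2
fi
```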

This created the “general” virtualenv. Feel free to call it whatever you like.
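
The second is pyenv global, sketched here with the same name. VENV_NAME is again just an illustrative variable.

```shell
VENV_NAME="general"  # must match the virtualenv created above

if command -v pyenv >/dev/null 2>&1; then
  # Record the virtualenv as the global default (stored in ~/.pyenv/version).
  pyenv global "$VENV_NAME"
  # Show which version new shells will pick up, and why.
  pyenv version
else
  echo "pyenv is not on PATH yet; finish the shell setup first" >&2
fi
```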

This sets the default virtualenv to general.

Where it went wrong…

I was super excited. Now I had Python 3.6.4 and was therefore ready to start working on some Lambda calls in Python. Or so I thought…

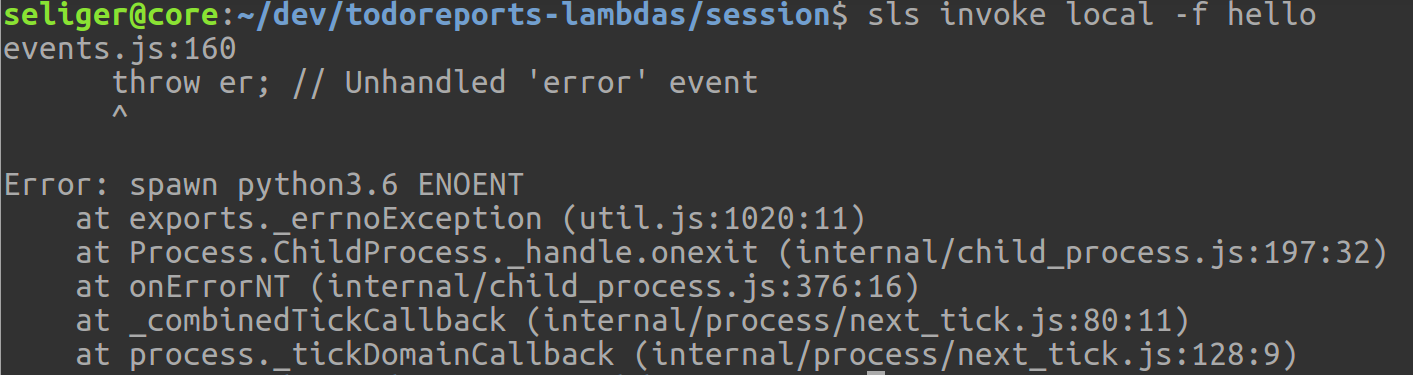

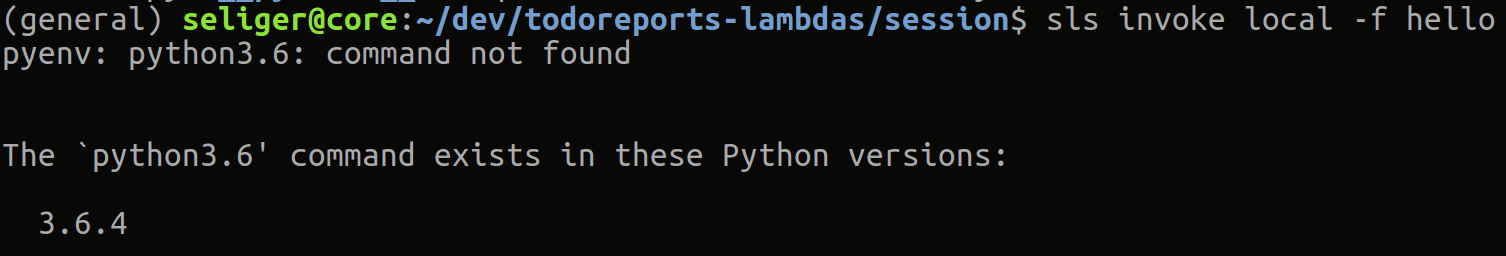

What insanity is this? I just installed 3.6.4, and the error is seemingly pointing in that general direction. So what gives?

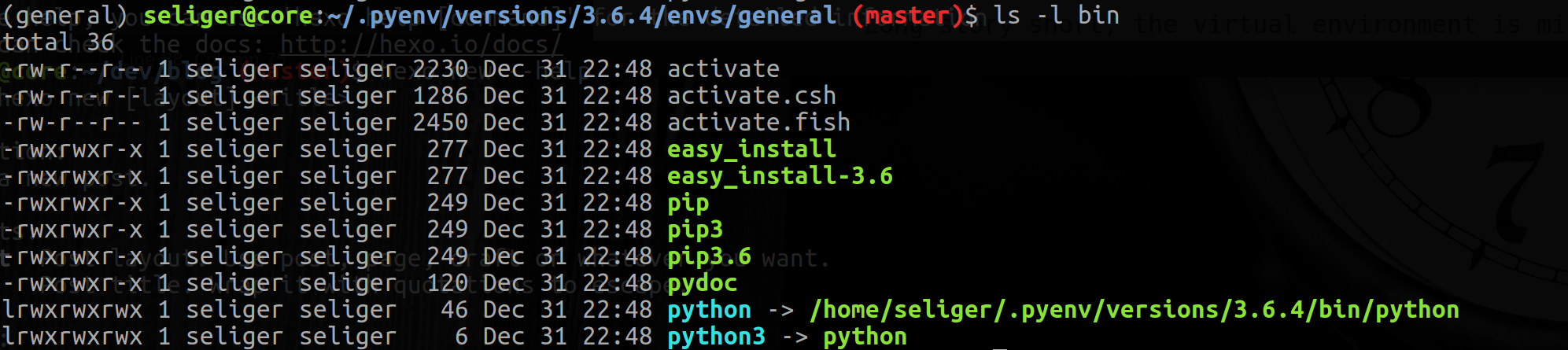

Long story short, the virtual environment is missing the python3.6 binary.

You’ll see that binaries like pip3.6 appear, but not python3.6. Serverless counts on the python3.6 binary as it wants to enforce version compatibility between local invocations and when the functions live in AWS.
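
If you want to check your own environment, a small helper along these lines reports which interpreter names are present. It is only a sketch: check_interpreters is a made-up helper name, and the path assumes a default pyenv layout with a virtualenv called “general”.

```shell
# Assumed location of the "general" virtualenv under a default pyenv install.
VENV_BIN="${PYENV_ROOT:-$HOME/.pyenv}/versions/general/bin"

# Report which interpreter names exist (and are executable) in a directory.
check_interpreters() {
  for name in python python3 python3.6; do
    if [ -x "$1/$name" ]; then
      echo "found:   $name"
    else
      echo "missing: $name"
    fi
  done
}

check_interpreters "$VENV_BIN"
```

A “missing” line for python3.6 next to “found” lines for python and python3 is the situation described here.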

Are you kidding me?!?

Now what?

At this point I’m really thinking to myself, “Had I just done this in Java or JavaScript, I’d be done by now…” However, I want to improve my Python knowledge and use the same language that my favorite to-do list manager, Todoist, is using, since I am writing code that provides some desired functionality using their APIs.

So here we are at a crossroads…

Really digging in…

Apparently I wasn’t the only one to notice this. On GitHub, in the pyenv-virtualenv repo, the following issues exist:

- python -m venv does not create python3.X symlink (but using python3.6 does)
- potentially missing symlink: include/python3.6.m
- Tox can’t find python3.x executable in virtualenv

I spent some time working with the thread in #206 as it seemed to be closest related. I dug into their code as well as the code for venv, the Python 3 way of generating virtual environments.

Finding the Problem

Reading the code for venv, it seemed odd that it would generate (link) binaries such as python and python3, but not python3.X. The behavior seemed incongruous with what the legacy virtualenv and conda do. I dug around in Python’s bug database, and then I opened a bug:

The Response

The Python folks were quite zippy in their response. Apparently this is expected behavior. Basically, if you call -m venv using the python3 command, you don’t get a python3.X binary. However, if you call -m venv with the python3.6 binary, you DO get all the binaries.
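
You can compare the two invocations yourself with a pair of throwaway environments. This is a sketch, not output from my system: WORK_DIR and VERSIONED are illustrative names, and newer Python releases may create the versioned name either way, so the two listings may match on your machine.

```shell
# Scratch directory for two disposable virtual environments.
WORK_DIR="$(mktemp -d)"

if command -v python3 >/dev/null 2>&1; then
  # Case 1: venv invoked through the generic python3 name.
  # --without-pip just keeps the run fast.
  python3 -m venv --without-pip "$WORK_DIR/via-python3" \
    && ls "$WORK_DIR/via-python3/bin"

  # Case 2: venv invoked through the fully versioned name (e.g. python3.6).
  VERSIONED="python$(python3 -c 'import sys; print("%d.%d" % sys.version_info[:2])')"
  if command -v "$VERSIONED" >/dev/null 2>&1; then
    "$VERSIONED" -m venv --without-pip "$WORK_DIR/via-versioned" \
      && ls "$WORK_DIR/via-versioned/bin"
  fi
fi
```

Comparing the two ls listings shows whether the versioned interpreter name only appears in the second environment.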

Seems “off” to me, but they confirmed that this is the intended behavior.

On the plus side, the Python developer who responded did indicate that the documentation is unclear about (read: completely fails to mention) the nuances of how the venv module is called. So the ticket has been renamed and will now be used to resolve the documentation issue.

The Workaround

So I submitted this PR that works around the issue by having pyenv-virtualenv call -m venv with the “fully qualified” binary (in this case python3.6).

Thus far, the PR sits, yet to be applied. Honestly, I’m not sure it’s the best solution, given that Python’s expected behavior is to behave differently based on how it is called. Is it really pyenv-virtualenv’s right to force the end user’s hand on this? For my use case yes, but what about other use cases I’m not considering?

Conclusion

I’m still not thrilled with the Python ecosystem. It is uneven, and working within its constructs is difficult at best. I know people rave over it, but I can do the same things in Java and JavaScript without the pomp and circumstance. It’s disappointing.

However, I’m not going to let this get to me. I’m going to press on. I’m going to continue to push for a proper resolution to the pyenv-virtualenv situation and contribute where I can to push the community in the right direction.

In the meantime, if you need your pyenv-virtualenv to generate the proper binaries, clone my fork of pyenv-virtualenv into your ~/.pyenv/plugins subdirectory.

I hope that you have a prosperous 2018!! Thanks for reading!